Configuring Apache Kafka Monitoring, Version 5.2

This section describes the required and optional configurations available for the Solution Package for Apache Kafka in the RTView Configuration Application. You must define the classpath to the Apache Kafka jar files, and you must define a data source connection for each Kafka component that you want to monitor.

Note: In order to monitor your Kafka brokers, consumers, zookeepers, and producers via JMX, additional options should be passed to the JVMs. See Additional Required Kafka Broker and Client Setup for more information.
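As a rough illustration of the kind of options involved, the sketch below assembles the standard JVM system properties that enable remote JMX on a given port. The helper name `jmx_jvm_options` and the port 9999 are hypothetical, and turning off authentication and SSL is only reasonable in a test environment. The referenced setup section remains the authoritative list of options for this Solution Package.

```python
def jmx_jvm_options(port: int, authenticate: bool = False, ssl: bool = False) -> list[str]:
    """Return standard JVM flags that expose a remote JMX port.

    The function name and defaults are illustrative assumptions,
    not part of RTView or Kafka.
    """
    if not 0 < port < 65536:
        raise ValueError(f"invalid JMX port: {port}")
    return [
        "-Dcom.sun.management.jmxremote",
        f"-Dcom.sun.management.jmxremote.port={port}",
        f"-Dcom.sun.management.jmxremote.authenticate={str(authenticate).lower()}",
        f"-Dcom.sun.management.jmxremote.ssl={str(ssl).lower()}",
    ]


if __name__ == "__main__":
    # Hypothetical port; use the value you later enter in the JMX Port field.
    print(" ".join(jmx_jvm_options(9999)))
```

Kafka's launch scripts typically read such flags from the `KAFKA_JMX_OPTS` environment variable, so the printed string could be exported there before starting a broker or client.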

Configuring Data Collection

Note: See Creating Secure Connections for additional information on creating secure connections for Apache Kafka.

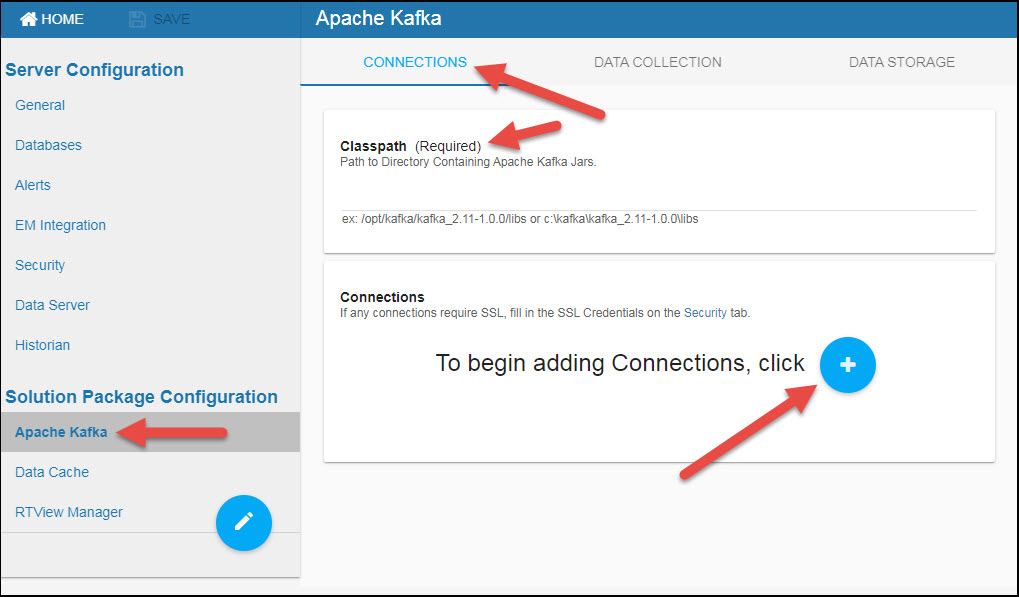

- Click Solution Package Configuration > Apache Kafka.

The CONNECTIONS tab displays.

Note: No setup is required for Solution Package Configuration > RTView Manager or Data Cache.

- Enter the correct full path to the directory containing the Apache Kafka jar files in the Classpath field.

- Click the button to add a connection.

The Add Connection dialog displays.

- Add your desired connections and click the SAVE button after each new connection. You must add at least one Zookeeper and one Broker connection.

Broker Connection

Connection Name: The name of the connection/server.

Connection Type: Select Broker from this drop-down list.

Host: The IP address of the host.

JMX Port: The JMX port used when connecting.

Username: The username is used when creating the connection. This field is optional (if no username/password is set for the broker).

Password: This password is used when creating the connection. This field is optional (if no username/password is set for the broker). By default, the password entered is hidden. Click the associated "eye" icon to view the password text.

Cluster Name: The name of the cluster.

Topic Names: Optionally enter one or more topics to restrict monitoring to only those topics on the broker (all topics are monitored by default). To enter multiple topics, type a comma or press the Tab key after each one. Once a topic is entered, you can click the X next to its name to remove it.

Client Port: Enter the Client Port to use this broker to monitor topic partitions for this cluster.

Consumer Connection

Connection Name: The name of the connection/server.

Connection Type: Select Consumer from this drop-down list.

Host: The IP address of the host.

JMX Port: The JMX port used when connecting.

Username: The username is used when creating the connection. This field is optional (if no username/password is set for the consumer).

Password: This password is used when creating the connection. This field is optional (if no username/password is set for the consumer). By default, the password entered is hidden. Click the associated "eye" icon to view the password text.

Cluster Name: The name of the cluster.

Producer Connection

Connection Name: The name of the connection/server.

Connection Type: Select Producer from this drop-down list.

Host: The IP address of the host.

JMX Port: The JMX port used when connecting.

Username: The username is used when creating the connection. This field is optional (if no username/password is set for the producer).

Password: This password is used when creating the connection. This field is optional (if no username/password is set for the producer). By default, the password entered is hidden. Click the associated "eye" icon to view the password text.

Cluster Name: The name of the cluster.

Zookeeper Connection

Connection Name: The name of the connection/server.

Connection Type: Select Zookeeper from this drop-down list.

Client Port: Optionally enter the Client Port if you want to monitor topic partitions for this cluster.

Host: The IP address of the host.

JMX Port: The JMX port used when connecting.

Username: The username is used when creating the connection. This field is optional (if no username/password is set for the zookeeper).

Password: This password is used when creating the connection. This field is optional (if no username/password is set for the zookeeper). By default, the password entered is hidden. Click the associated "eye" icon to view the password text.

Cluster Name: The name of the cluster.

- You can optionally modify the Poll Rates (query interval, in seconds) that will be used to collect the metric data for various general and disk usage caches in Solution Package Configuration > Apache Kafka > DATA COLLECTION > Poll Rates.
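Before entering connections in the dialog, it can help to check a planned connection list against the rules described in this procedure. The following is a minimal sketch under stated assumptions: the `KafkaConnection` class, its field names, and the `validate` function are hypothetical and simply mirror the dialog fields. The `jmx_url` method shows the standard JMX RMI service URL form that a host and JMX port usually map to; whether RTView builds its URL this way is an assumption.

```python
from dataclasses import dataclass
from typing import Optional

VALID_TYPES = {"Broker", "Consumer", "Producer", "Zookeeper"}


@dataclass
class KafkaConnection:
    """Hypothetical record mirroring the Add Connection dialog fields."""
    name: str
    conn_type: str
    host: str
    jmx_port: int
    cluster: str
    username: Optional[str] = None      # optional, as in the dialog
    password: Optional[str] = None      # optional, as in the dialog
    client_port: Optional[int] = None   # Broker and Zookeeper only

    def jmx_url(self) -> str:
        # Standard JMX-over-RMI URL form (assumed, not confirmed for RTView).
        return f"service:jmx:rmi:///jndi/rmi://{self.host}:{self.jmx_port}/jmxrmi"


def validate(connections: list) -> list:
    """Return a list of problems; an empty list means the checks passed."""
    problems = []
    for c in connections:
        if c.conn_type not in VALID_TYPES:
            problems.append(f"{c.name}: unknown connection type {c.conn_type!r}")
        if not 0 < c.jmx_port < 65536:
            problems.append(f"{c.name}: invalid JMX port {c.jmx_port}")
    # At least one Zookeeper and one Broker connection are required.
    present = {c.conn_type for c in connections}
    for required in ("Zookeeper", "Broker"):
        if required not in present:
            problems.append(f"missing required {required} connection")
    return problems


if __name__ == "__main__":
    planned = [KafkaConnection("broker1", "Broker", "10.0.0.5", 9999, "cluster1")]
    print(validate(planned))
    print(planned[0].jmx_url())
```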

Configuring Historical Data Collection (Optional)

You can specify the number of history rows to store in memory, the compaction rules, the duration before metrics are expired and deleted, and the different types of metrics that you want the Historian to store in the DATA STORAGE tab in the RTView Configuration Application.

Defining the Storage of In Memory History

You can modify the maximum number of history rows to store in memory in the DATA STORAGE tab. The History Rows property defines the maximum number of rows to store for the KafkaZookeeper, KafkaServer, KafkaConsumer, KafkaProducer, KafkaServerMetrics, KafkaServerHistogram, KafkaServerTimer, KafkaServerTopicMetrics, KafkaTopicPartition, KafkaTopicTotalsByTopic, KafkaTopicTotalsByServer, KafkaTopicTotalsByConsumer, KafkaTopicTotalsByTopicAndServer, and KafkaTopicTotalsByTopicAndConsumer caches. The default setting for History Rows is 50,000. To update the default setting:

- Navigate to the Solution Package Configuration > Apache Kafka > DATA STORAGE tab.

- In the Size region, click the History Rows field and specify the desired number of rows.
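To picture what a row cap does, the sketch below models an in-memory history as a bounded queue. It illustrates the general idea only and is not RTView's implementation: the `make_history` helper and the row layout are hypothetical, and the assumption that the oldest rows are dropped first is mine.

```python
from collections import deque

HISTORY_ROWS = 50_000  # default value stated for the History Rows property


def make_history(max_rows: int = HISTORY_ROWS) -> deque:
    """Create a history buffer that silently drops its oldest row when full."""
    return deque(maxlen=max_rows)


# Use a tiny cap so the trimming is easy to see.
history = make_history(max_rows=3)
for ts in range(5):
    history.append({"time_stamp": ts, "value": ts * 10})

if __name__ == "__main__":
    # Only the three most recent rows remain.
    print([row["time_stamp"] for row in history])
```

A larger History Rows value keeps more data available in memory at the cost of a larger memory footprint per cache.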

Defining Compaction Rules

Data compaction condenses large quantities of data according to a defined rule, so that you store a reasonably sized sample of your data rather than every row and avoid overloading your database. The available fields are:

Condense Interval -- The time interval at which the cache history is condensed for the following caches: KafkaZookeeper, KafkaServer, KafkaConsumer, KafkaProducer, KafkaServerMetrics, KafkaServerHistogram, KafkaServerTimer, KafkaServerTopicMetrics, KafkaTopicPartition, KafkaTopicTotalsByTopic, KafkaTopicTotalsByServer, KafkaTopicTotalsByConsumer, KafkaTopicTotalsByTopicAndServer, and KafkaTopicTotalsByTopicAndConsumer. The default is 60 seconds.

Condense Raw Time -- The time span of raw data kept in the cache history table for the following caches: KafkaZookeeper, KafkaServer, KafkaConsumer, KafkaProducer, KafkaServerMetrics, KafkaServerHistogram, KafkaServerTimer, KafkaServerTopicMetrics, KafkaTopicPartition, KafkaTopicTotalsByTopic, KafkaTopicTotalsByServer, KafkaTopicTotalsByConsumer, KafkaTopicTotalsByTopicAndServer, and KafkaTopicTotalsByTopicAndConsumer. The default is 1200 seconds.

History Time Span -- The duration of time to retain a row of cached data based on its timestamp.

The cache trims its History table by removing rows with timestamps that are older than the limit specified here. Specify the duration in seconds or specify a number followed by a single character indicating the desired time interval (for example, 15m for 15 minutes). The format is a number followed by one of the following valid characters:

y - years (365 days)

M - months (31 days)

w - weeks (7 days)

d - days

h - hours

m - minutes

s - seconds

Example: 1M

Note that this setting only determines the duration of rows kept in the History table by the cache data source. It does not affect database storage, if any, associated with the cache.

The caches impacted by this field are: KafkaZookeeper, KafkaServer, KafkaRegisteredBrokers, KafkaConsumer, KafkaProducer, KafkaServerMetrics, KafkaServerHistogram, KafkaServerTimer, KafkaServerTopicMetrics, KafkaTopicPartition, KafkaTopicTotalsByTopic, KafkaTopicTotalsByServer, KafkaTopicTotalsByConsumer, KafkaTopicTotalsByTopicAndServer, and KafkaTopicTotalsByTopicAndConsumer.
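The History Time Span format can be made concrete with a small converter. This is a sketch of the format as described here, not RTView code; the function name `duration_to_seconds` is hypothetical. It treats a bare number as seconds and uses the unit lengths listed above (365-day years, 31-day months, 7-day weeks). Note that the unit letters are case-sensitive: `M` is months and `m` is minutes.

```python
import re

# Unit lengths in seconds, following the list in the text.
UNIT_SECONDS = {
    "y": 365 * 86400,
    "M": 31 * 86400,
    "w": 7 * 86400,
    "d": 86400,
    "h": 3600,
    "m": 60,
    "s": 1,
}

_PATTERN = re.compile(r"(\d+)([yMwdhms]?)")


def duration_to_seconds(text: str) -> int:
    """Convert a value such as '15m', '1M', or '1200' to seconds."""
    match = _PATTERN.fullmatch(text.strip())
    if match is None:
        raise ValueError(f"not a valid duration: {text!r}")
    number, unit = match.groups()
    # A missing unit means the number is already in seconds.
    return int(number) * UNIT_SECONDS.get(unit, 1)


if __name__ == "__main__":
    for sample in ("15m", "1M", "1200"):
        print(sample, "=", duration_to_seconds(sample), "seconds")
```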

Compaction Rules -- The rules used to condense your historical data in the database for the following caches: KafkaZookeeper, KafkaServer, KafkaConsumer, KafkaProducer, KafkaServerMetrics, KafkaServerHistogram, KafkaServerTimer, KafkaServerTopicMetrics, KafkaTopicPartition, KafkaTopicTotalsByTopic, KafkaTopicTotalsByServer, KafkaTopicTotalsByConsumer, KafkaTopicTotalsByTopicAndServer, and KafkaTopicTotalsByTopicAndConsumer. By default, the columns kept in history are aggregated by averaging rows with the rule 1h -;1d 5m;2w 15m. This means that data from the most recent hour is not aggregated (1h - rule), data up to 1 day old is aggregated every 5 minutes (1d 5m rule), and data up to 2 weeks old is aggregated every 15 minutes (2w 15m rule).

To update these settings:

- Navigate to the Solution Package Configuration > Apache Kafka > DATA STORAGE tab.

- In the Compaction region, click the Condense Interval, Condense Raw Time, History Time Span, and Compaction Rules fields and specify the desired settings.

Note: When you click in the Compaction Rules field, the Copy default text to clipboard link appears, which allows you to copy the default text (that appears in the field) and paste it into the field. This lets you edit the existing string rather than create one from scratch.
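When editing a Compaction Rules string, it may help to see how the default rule breaks down. The sketch below splits a rule string on semicolons and converts each pair into an age limit and an aggregation interval in seconds. The function names are hypothetical, the parsing follows the interpretation of the default rule given in the Compaction Rules description, and the handling of data older than the last rule is an assumption rather than documented behavior.

```python
UNIT_SECONDS = {"y": 365 * 86400, "M": 31 * 86400, "w": 7 * 86400,
                "d": 86400, "h": 3600, "m": 60, "s": 1}


def to_seconds(token: str) -> int:
    """Convert a token such as '5m' or '2w' to seconds."""
    return int(token[:-1]) * UNIT_SECONDS[token[-1]]


def parse_compaction_rules(rules: str) -> list:
    """Parse '1h -;1d 5m;2w 15m' into (max_age, interval) pairs.

    An interval of None stands for '-', meaning no aggregation.
    """
    parsed = []
    for rule in rules.split(";"):
        age, interval = rule.split()
        parsed.append((to_seconds(age),
                       None if interval == "-" else to_seconds(interval)))
    return parsed


def interval_for_age(parsed: list, age_seconds: int):
    """Return the aggregation interval that applies to data of a given age."""
    for max_age, interval in parsed:
        if age_seconds <= max_age:
            return interval
    # Assumption: data older than every rule uses the last rule's interval.
    return parsed[-1][1]


if __name__ == "__main__":
    default = parse_compaction_rules("1h -;1d 5m;2w 15m")
    print(default)
    print(interval_for_age(default, 2 * 3600))  # two-hour-old data
```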

Defining Expiration and Deletion Duration for Apache Kafka Metrics

The data for each metric is stored in a specific cache and, when the data is not updated in a certain period of time, that data will either be marked as expired or, if it has been an extended period of time, it will be deleted from the cache altogether. By default, metric data will be set to expired when the data in the cache has not been updated within 45 seconds. Also, by default, if the data has not been updated in the cache within 3600 seconds, it will be removed from the cache.

The caches impacted by the Expire Time and Delete Time properties are: KafkaZookeeper, KafkaServer, KafkaRegisteredBrokers, KafkaConsumer, KafkaProducer, KafkaServerMetrics, KafkaServerHistogram, KafkaServerTimer, KafkaServerTopicMetrics, KafkaTopicPartition, KafkaTopicTotalsByTopic, KafkaTopicTotalsByServer, KafkaTopicTotalsByConsumer, KafkaTopicTotalsByTopicAndServer, and KafkaTopicTotalsByTopicAndConsumer. To modify these defaults:

- Navigate to the Solution Package Configuration > Apache Kafka > DATA STORAGE tab.

- In the Duration region, click the Expire Time and Delete Time fields and specify the desired settings.
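The relationship between the two thresholds can be sketched as a simple classification of a cache row by the time since its last update. The function name `row_state` and the state labels are hypothetical; the defaults are the 45-second and 3600-second values stated above.

```python
EXPIRE_TIME = 45     # default Expire Time, in seconds
DELETE_TIME = 3600   # default Delete Time, in seconds


def row_state(seconds_since_update: float,
              expire_time: float = EXPIRE_TIME,
              delete_time: float = DELETE_TIME) -> str:
    """Classify a cache row by how long ago it was last updated."""
    if seconds_since_update > delete_time:
        return "deleted"   # removed from the cache altogether
    if seconds_since_update > expire_time:
        return "expired"   # kept in the cache but marked as expired
    return "active"


if __name__ == "__main__":
    for age in (10, 60, 4000):
        print(age, "->", row_state(age))
```

Setting Expire Time below your poll rate would cause rows to be marked expired between normal updates, so the two settings are best chosen together.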

Enabling/Disabling Storage of Historical Data

The History Storage section allows you to select which metrics you want the Historian to store in the history database. By default, all historical data (in the KafkaServer, KafkaServerTopicMetrics, KafkaServerMetrics, KafkaServerHistogram, KafkaServerTimer, KafkaZookeeper, KafkaConsumer, KafkaProducer, KafkaTopicPartition, KafkaTopicTotalsByTopic, KafkaTopicTotalsByServer, KafkaTopicTotalsByConsumer, KafkaTopicTotalsByTopicAndServer, and KafkaTopicTotalsByTopicAndConsumer caches) is saved to the database. To disable the collection of historical data, perform the following steps:

- Navigate to the Solution Package Configuration > Apache Kafka > DATA STORAGE tab.

- In the History Storage region, use the toggles to select the metrics that you want to store. Blue is enabled; gray is disabled.

Defining a Prefix for All History Table Names for Apache Kafka Metrics

The History Table Name Prefix field allows you to define a prefix that will be added to the database table names so that the Monitor can differentiate history data between data servers when you have multiple data servers with corresponding Historians using the same solution package(s) and database. In this case, each Historian needs to save to a different table, otherwise the corresponding data server will load metrics from both Historians on startup. Once you have defined the History Table Name Prefix, you will need to create the corresponding tables in your database as follows:

- Locate the .sql template for your database under rtvapm/kafkamon/dbconfig and make a copy of the template.

- Add the value you entered for the History Table Name Prefix to the beginning of all table names in the copied .sql template.

- Use the copied .sql template to create the tables in your database.

Note: If you are using Oracle for your Historian Database, you must limit the History Table Name Prefix to 2 characters because Oracle does not allow table names longer than 30 characters (and the longest table name for the solution package is 28 characters).

To add the prefix:

- Navigate to the Solution Package Configuration > Apache Kafka > DATA STORAGE tab.

- Click on the History Table Name Prefix field and enter the desired prefix name.
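Adding the prefix to a copied .sql template can be scripted. The following is a minimal sketch, assuming the template declares its tables with plain, unquoted `CREATE TABLE <name>` statements; the table name `EXAMPLE_TABLE` and the function `add_prefix` are hypothetical. A real template may also reference table names elsewhere (for example, in index definitions), which this sketch does not rewrite, so review the result before running it.

```python
import re

ORACLE_MAX_NAME = 30  # Oracle table-name limit cited in the note above

# Matches unquoted table names only (an assumption about the template).
_CREATE = re.compile(r"(CREATE\s+TABLE\s+)(\w+)", re.IGNORECASE)


def add_prefix(sql: str, prefix: str, max_len=None) -> str:
    """Prepend prefix to every table name in CREATE TABLE statements."""
    def replace(match):
        name = prefix + match.group(2)
        if max_len is not None and len(name) > max_len:
            raise ValueError(f"table name too long ({len(name)} chars): {name}")
        return match.group(1) + name
    return _CREATE.sub(replace, sql)


if __name__ == "__main__":
    template = "CREATE TABLE EXAMPLE_TABLE (time_stamp TIMESTAMP);"
    print(add_prefix(template, "P1", max_len=ORACLE_MAX_NAME))
```

Passing `max_len=ORACLE_MAX_NAME` makes the script fail early if a prefix would push any table name past the Oracle limit.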